Evaluating the Effectiveness of LLM-Evaluators (aka LLM-as-Judge)


Hey friends,

I've been thinking and experimenting a lot with how to apply, evaluate, and operate LLM-evaluators and have gone down the rabbit hole on papers and results. Here's a writeup on what I've learned, as well as my intuition on it. It's a very long piece (49 min read) and so I'm only sending you the intro section. It'll be easier to read the full thing on my site.

I appreciate you receiving this, but if you want to stop, simply unsubscribe.

👉 Read in browser for best experience (web version has extras & images) 👈

LLM-evaluators, also known as “LLM-as-a-Judge”, are large language models (LLMs) that evaluate the quality of another LLM’s response to an instruction or query.

Their growing adoption is partly driven by necessity. LLMs can now solve increasingly complex and open-ended tasks such as long-form summarization, translation, and multi-turn dialogue. As a result, conventional evals that rely on n-grams, semantic similarity, or a gold reference have become less effective at distinguishing good responses from the bad. And while we can rely on human evaluation or finetuned task-specific evaluators, they require significant effort and high-quality labeled data, making them difficult to scale.

Thus, LLM-evaluators offer a promising alternative. If you’re considering using an LLM-evaluator, this is written for you. Drawing from two dozen papers, we’ll discuss:

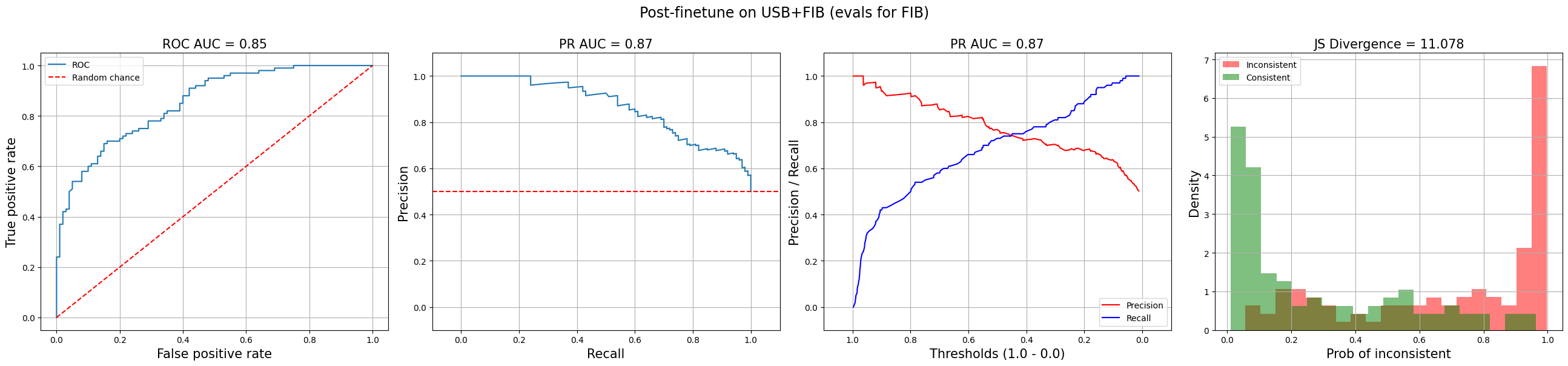

After reading this, you’ll gain an intuition on how to apply, evaluate, and operate LLM-evaluators. We’ll learn when to apply (i) direct scoring vs. pairwise comparisons, (ii) correlation vs. classification metrics, and (iii) LLM APIs vs. finetuned evaluator models.

Key considerations before adopting an LLM-evaluator

Before reviewing the literature on LLM-evaluators, let’s first discuss a few questions which will help us interpret the findings as well as figure out how to use an LLM-evaluator.

First, what baseline are we comparing an LLM-evaluator against? For example, if we’re prompting an LLM API, are we comparing it to human annotators or a smaller, finetuned evaluator model? It’s easier to match the former than the latter on accuracy and speed.

Most folks have human annotators as the baseline. Here, we aim for the LLM-human correlation to match human-human correlation. Compared to human annotators, LLM-evaluators can be orders of magnitude faster and cheaper, as well as more reliable.

On the other hand, if your baseline is a finetuned classifier or reward model, then the goal is for the LLM-evaluator to achieve similar recall and precision as a finetuned classifier. This is a more challenging baseline. Furthermore, LLM-evaluators are unlikely to match the millisecond-level latency of a small finetuned evaluator, especially if the former requires Chain-of-Thought (CoT). LLM-evaluators likely also cost more per inference.

Second, how will we score responses via LLM-evaluators? There are at least three approaches that provide varying levels of accuracy, reliability, and flexibility.

Direct scoring evaluates a single response without needing an alternative for comparison. This makes it more versatile than pairwise comparison. Because it scores output directly, it’s more suitable for objective assessments such as measuring faithfulness to a source text or detecting policy violations such as toxicity.
Pairwise comparison chooses the better of two responses or declares a tie. It’s typically used (and more reliable) for subjective evals such as persuasiveness, tone, coherence, etc. Studies show that pairwise comparisons lead to more stable results and smaller differences between LLM judgments and human annotations relative to direct scoring.

Reference-based evaluation involves comparing the response being evaluated to a gold reference. The reference contains the information that should be included in the generated response. The LLM-evaluator evaluates how closely the generated response matches the reference, essentially doing a more sophisticated form of fuzzy-matching.

These three approaches are not interchangeable. Some evaluation tasks, such as assessing faithfulness or instruction-following, don’t fit the pairwise comparison paradigm. For example, a response is either faithful to the provided context or it is not; evaluating a response as more faithful than the alternative does not address the eval criteria. Similarly, reference-based evaluations require annotated references, while direct scoring and pairwise comparisons do not.

Finally, what metrics will we use to evaluate LLM-evaluators? Classification and correlation metrics are typically adopted in the literature and industry.

Classification metrics are more straightforward to apply and interpret. For example, we can evaluate the recall and precision of an LLM-evaluator at the task of evaluating the factual inconsistency or toxicity of responses. Or we could assess the LLM-evaluator’s ability to pick the more preferred response via pairwise comparison. Either way, we can frame it as a binary task and rely on good ol’ classification metrics.
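To make the binary framing concrete, here is a minimal sketch of computing precision and recall for an LLM-evaluator against human labels. The function name `precision_recall` and the label lists are hypothetical and made up for illustration (1 = factually inconsistent, 0 = consistent); they are not taken from any paper or dataset.

```python
from typing import Sequence


def precision_recall(human: Sequence[int], judge: Sequence[int]) -> tuple[float, float]:
    """Precision and recall of the judge on the positive class (label 1)."""
    if len(human) != len(judge):
        raise ValueError("label lists must have the same length")
    # Count true positives, false positives, and false negatives.
    tp = sum(1 for h, j in zip(human, judge) if h == 1 and j == 1)
    fp = sum(1 for h, j in zip(human, judge) if h == 0 and j == 1)
    fn = sum(1 for h, j in zip(human, judge) if h == 1 and j == 0)
    # Guard against division by zero when a class never appears.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels: 1 = factually inconsistent, 0 = consistent.
human_labels = [1, 0, 1, 1, 0, 0, 1, 0]
judge_labels = [1, 0, 0, 1, 0, 1, 1, 0]

precision, recall = precision_recall(human_labels, judge_labels)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

The same function would work for pairwise comparison if we encode "judge picked the human-preferred response" as the binary label, though how to treat ties is a design choice this sketch leaves open.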

Diagnostic plots for classification tasks (source)

Correlation metrics are trickier to interpret. Some commonly used correlation metrics include Cohen’s κ (kappa), Kendall’s τ (tau), and Spearman’s ρ (rho).

Cohen’s κ measures the agreement between two raters on categorical data, taking into account the probability of agreement occurring due to chance. It ranges from -1 to 1, with 0 indicating no agreement beyond chance and 1 indicating perfect agreement. It is generally more conservative compared to other correlation metrics. Values of 0.21 to 0.40 can be interpreted as fair agreement while 0.41 to 0.60 suggest moderate agreement.

Kendall’s τ and Spearman’s ρ measure the strength and direction of the association between two rankings. They range from -1 to 1, where -1 indicates perfect negative correlation, 1 indicates perfect positive correlation, and 0 suggests no correlation. Kendall’s τ is more robust to outliers due to its focus on the relative ordering of pairs while Spearman’s ρ is more sensitive to the magnitude of differences between ranks. They typically have higher values compared to Cohen’s κ since they don’t adjust for chance agreement.

When choosing a metric, consider the type of data you’re working with. Cohen’s κ is more suitable for binary or categorical data when you want to assess the agreement between raters while adjusting for chance agreement. However, it may over-penalize ordinal data, such as a Likert scale. If your data is ordinal, consider Kendall’s τ or Spearman’s ρ instead.

I tend to be skeptical of correlation metrics. They don’t account for chance agreement and thus could be overoptimistic (though Cohen’s κ is an exception). Furthermore, compared to classification metrics, it’s less straightforward to translate correlation metrics to performance in production. (What’s the evaluator’s recall on bad responses? What about false positive rate?) Thus, where possible, I have my evaluators return binary outputs.
This improves model performance while making it easier to apply classification metrics.
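To illustrate how chance adjustment changes the picture, here is a rough sketch of Cohen’s κ and Kendall’s τ in plain Python. The function names and the toy labels are hypothetical, and the τ shown is the simple tau-a variant with no tie correction, which is an assumption of this sketch; library implementations often default to a tie-corrected variant.

```python
from collections import Counter
from itertools import combinations
from typing import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Chance-adjusted agreement between two raters on categorical labels."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("need two non-empty label lists of equal length")
    # Observed agreement: fraction of items where both raters agree.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Expected agreement if raters labeled independently at their own base rates.
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


def kendall_tau_a(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall's tau-a: (concordant - discordant) / total pairs, no tie correction."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        sign = (x1 - x2) * (y1 - y2)
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    total_pairs = len(x) * (len(x) - 1) / 2
    return (concordant - discordant) / total_pairs


# Hypothetical binary labels from a human and an LLM-evaluator.
human_labels = [1, 0, 1, 1, 0, 0, 1, 0]
judge_labels = [1, 0, 0, 1, 0, 1, 1, 0]
raw_agreement = sum(h == j for h, j in zip(human_labels, judge_labels)) / len(human_labels)
kappa = cohens_kappa(human_labels, judge_labels)

# Hypothetical 1-5 quality scores for five responses.
human_scores = [1, 2, 3, 4, 5]
judge_scores = [2, 1, 3, 5, 4]
tau = kendall_tau_a(human_scores, judge_scores)

print(f"raw agreement={raw_agreement:.2f} kappa={kappa:.2f} tau={tau:.2f}")
```

On these toy labels the raters agree on 75% of items, yet κ is only 0.5 because half of that agreement would be expected by chance with balanced classes. That gap is the reason κ reads as more conservative.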

Eugene Yan

I build ML, RecSys, and LLM systems that serve customers at scale, and write about what I learn along the way. Join 7,500+ subscribers!
