Building a Multi-Agent Framework to Summarize News with MCP & Q


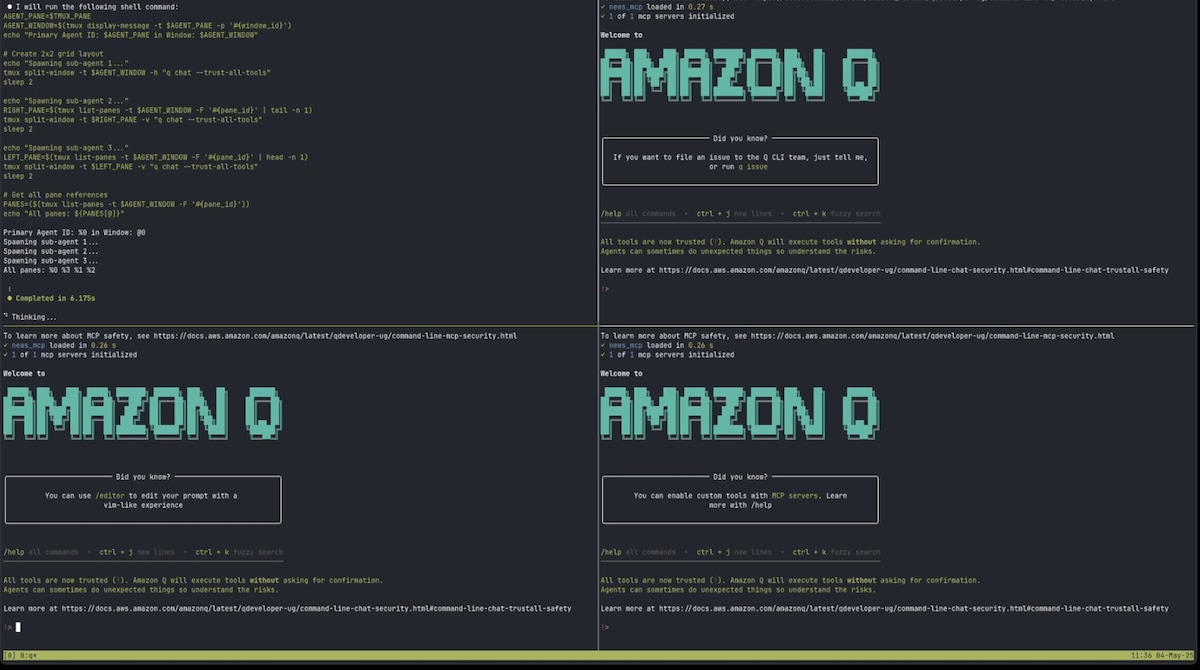

Hey friends,

To better understand MCPs and agentic workflows, I built a news agent that generates a daily news summary. It's built on Amazon Q CLI and MCP: the former provides the agentic framework, and the latter provides the news feeds via tools. It also uses tmux to spawn each sub-agent and display its work.

P.S. If you're interested in topics like this, my friends Ben and Swyx are organizing the AI Engineer World's Fair in San Francisco from 3rd to 5th June. Come talk to builders sharing their experience deploying AI systems in production. Here's a BIG DISCOUNT for tickets.

At a high level, here's how it works. Below, we'll walk through how the MCP tools are built and how the main agent spawns and monitors sub-agents. Each sub-agent processes its allocated news feeds and generates a summary for each feed. The main agent then combines these summaries into a final summary. There's also a quick three-minute demo at the end so you can see it in action.

Setting up news MCPs

Each news feed has its own RSS reader, parser, and formatter, which handle the unique structure and format of that feed. (Perhaps in the future we can simply use an LLM to parse these large text blobs reliably and cheaply.) For example, here's the code for fetching and parsing the Hacker News RSS feed:

The MCP server then imports these parsers and sets up the MCP tools. MCP makes it easy to set up tools with the …

Setting up news agents

Next, we'll set up a multi-agent system to parse the news feeds and generate summaries. We'll have a main agent (image below, top-left) that coordinates three sub-agents, each running in a separate tmux window (image below, bottom-left and right panes).
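The Hacker News reader mentioned in the MCP section follows a fetch, parse, format split. Here is a minimal sketch of that split. It assumes the public feed at `https://news.ycombinator.com/rss` and uses only Python's standard library. The names `Story`, `fetch_rss`, `parse_hn_rss`, and `format_stories` are hypothetical and are not taken from the news-agents repo.

```python
import urllib.request
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# ASSUMPTION: the public Hacker News RSS endpoint; the repo may use a different source.
HN_RSS_URL = "https://news.ycombinator.com/rss"


@dataclass
class Story:
    title: str
    link: str
    comments: str


def fetch_rss(url: str = HN_RSS_URL, timeout: float = 10.0) -> str:
    """Download the raw RSS XML as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def parse_hn_rss(xml_text: str, limit: int = 30) -> list[Story]:
    """Pull title, link, and comments URL out of each <item>, up to `limit` items."""
    root = ET.fromstring(xml_text)
    stories: list[Story] = []
    for item in root.iter("item"):
        if len(stories) >= limit:
            break
        stories.append(
            Story(
                title=(item.findtext("title") or "").strip(),
                link=(item.findtext("link") or "").strip(),
                comments=(item.findtext("comments") or "").strip(),
            )
        )
    return stories


def format_stories(stories: list[Story]) -> str:
    """Render stories as numbered plain text that an LLM can summarize."""
    return "\n".join(
        f"{i}. {s.title}\n   Link: {s.link}\n   Comments: {s.comments}"
        for i, s in enumerate(stories, start=1)
    )


if __name__ == "__main__":
    sample = (
        '<rss version="2.0"><channel><title>Hacker News</title>'
        "<item><title>Example story</title><link>https://example.com</link>"
        "<comments>https://news.ycombinator.com/item?id=1</comments></item>"
        "</channel></rss>"
    )
    # Swap `sample` for fetch_rss() to parse the live feed (needs network access).
    print(format_stories(parse_hn_rss(sample)))
```

In an MCP server, a formatter like `format_stories` would be exposed as a tool. With the official MCP Python SDK, this is commonly done by decorating a function with FastMCP's `@mcp.tool()`. The repo's actual wiring may differ.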

The main agent (top-left) with its newly spawned sub-agents

The main agent first divides the news feeds into three groups. It then spawns three sub-agents, each assigned one group of feeds. In the demo, each sub-agent is displayed in its own tmux window.

Next, the sub-agents process their assigned news feeds and generate a summary for each one. Each sub-agent also categorizes the stories within each feed, such as AI/ML, technology, and business. Throughout this process, the sub-agents display status updates. When a sub-agent finishes processing its assigned feeds, it displays a final completion update.

While the sub-agents are processing their assigned feeds, the main agent monitors their progress. When all sub-agents are done, the main agent reads the individual feed summaries and synthesizes them into a final summary.

3-minute demo of how it works

Here's a three-minute demo to get a better understanding of how it works (1080p resolution). We'll process the following six news feeds.

The code is available here: news-agents.
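The orchestration steps above can be sketched as follows: split the feeds into three groups, spawn one sub-agent per group in its own tmux window, and wait until all sub-agents are done. This is a minimal sketch under several assumptions:

- feeds are grouped round-robin;
- each sub-agent signals completion by creating a hypothetical marker file such as `agent_0.done`;
- `agent_cmd` is a placeholder for however the sub-agent is launched (for example, via Amazon Q CLI) and is assumed to accept the prompt as its last argument.

None of these names come from the news-agents repo.

```python
import shlex
import subprocess
import time
from pathlib import Path


def split_feeds(feeds: list[str], n_agents: int = 3) -> list[list[str]]:
    """Assign feeds round-robin so group sizes differ by at most one."""
    groups: list[list[str]] = [[] for _ in range(n_agents)]
    for i, feed in enumerate(feeds):
        groups[i % n_agents].append(feed)
    return groups


def tmux_spawn_args(agent_id: int, feeds: list[str], agent_cmd: str, status_dir: Path) -> list[str]:
    """Build the argv for `tmux new-window` that launches one sub-agent.

    ASSUMPTION: `agent_cmd` takes the prompt as its final argument, and the
    sub-agent is told to create a (hypothetical) marker file when it finishes.
    """
    marker = status_dir / f"agent_{agent_id}.done"
    prompt = (
        f"Summarize each of these news feeds: {', '.join(feeds)}. "
        f"When finished, create the file {marker}."
    )
    shell_cmd = f"{agent_cmd} {shlex.quote(prompt)}"
    # -d: create the window without switching to it; -n: name it after the agent
    return ["tmux", "new-window", "-d", "-n", f"agent-{agent_id}", shell_cmd]


def spawn_all(groups: list[list[str]], agent_cmd: str, status_dir: Path) -> None:
    """Open one tmux window per sub-agent (assumes a tmux session is running)."""
    for agent_id, feeds in enumerate(groups):
        subprocess.run(tmux_spawn_args(agent_id, feeds, agent_cmd, status_dir), check=True)


def pending_agents(status_dir: Path, n_agents: int) -> list[int]:
    """Return ids of sub-agents whose completion marker does not exist yet."""
    return [i for i in range(n_agents) if not (status_dir / f"agent_{i}.done").exists()]


def wait_for_agents(status_dir: Path, n_agents: int, poll_s: float = 5.0, timeout_s: float = 1800.0) -> bool:
    """Poll until every sub-agent has finished; return False on timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        if not pending_agents(status_dir, n_agents):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


if __name__ == "__main__":
    # Placeholder feed names; the demo's actual six feeds are not listed here.
    feeds = ["feed_1", "feed_2", "feed_3", "feed_4", "feed_5", "feed_6"]
    for group in split_feeds(feeds):
        print(group)
```

Round-robin keeps group sizes within one of each other, so with six feeds each of the three sub-agents gets two. Polling for marker files is just one simple way for the main agent to monitor progress. The actual project may instead have the main agent read the sub-agents' status output directly.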

Eugene Yan

I build ML, RecSys, and LLM systems that serve customers at scale, and write about what I learn along the way. Join 7,500+ subscribers!
